- The Idea Catalyst

The Advent of New Experiences

Apple's Liquid Glass and Beyond... Way Beyond

Hey there—hope your week’s going well!

We’re halfway through the year, and it’s the perfect time to pause, reflect and realign! In the past few months, I’ve reconnected with some of you (which I’m thankful for), and I always come away inspired. Speaking with you has helped me decide where to course-correct and where to double down.

Here’s what you can review:

✅ What goals did you have at the start of the year?

🚀 What’s working, and what isn’t?

🧠 What have you learnt so far?

🔁 What needs to be adjusted for the next 6 months?

Don’t waste the next 6 months, because soon, it’ll be 2026. Take 30 minutes, get these done and get better! Now, onto the main stuff—new experiences, beyond interfaces.

Liquid Glass ⚪️

Earlier this week, Apple announced iOS 26. We’re now going back to the 90s way of naming (e.g. Windows 95, Windows 98… iykyk), and we’re also kinda, sorta going back to the 2000s, when Apple was known for its Aqua user interface and Microsoft had its own version called Aero.

These glossy buttons ran the show during that period, and they added depth and layers to digital interfaces. However, we moved on towards the flat UI look. Balancing accessibility and interface design moved everyone towards a “safe zone” that is non-disruptive but, at the same time, took away the excitement of interacting with a digital interface. My first reaction when I saw the preview was “Wow, Apple just threw accessibility out of the window…” Then, when I saw them talking about visionOS, I thought about the glasses that Google announced and wondered whether Apple was trying something early, testing it with the public and adjusting accordingly.

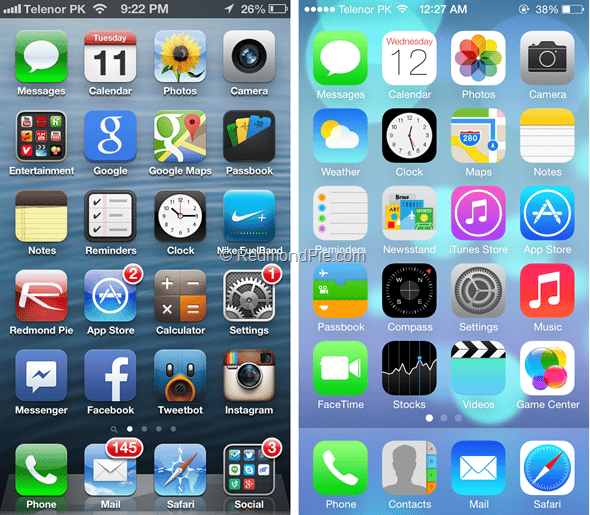

iOS 7 - 12 Years Ago

iOS 6 (left), iOS 7 (right)

As you probably remember, we got sick of skeuomorphic design very quickly (within a span of 5 years), ushering in the ‘flat design’ era that still guides the way most of us design interfaces today. We felt that digital interfaces did not need to reflect real-world gloss and shadows, and chose simplicity instead. iOS 7 from Apple did just that. So did Windows 8 with its “Metro” design. However, the next criticism came: everything is too flat, and people don’t know what is clickable anymore. It took Apple 4 years to fix the interface and change the antenna dots back to bars. They did the same with the parallax effect in iOS, which was used to communicate depth but gave some people motion sickness; later updates reduced the motion. I presume that Apple will do the same here: test, try, let consumers complain (or give feedback, if you will), and fix. However, I do think that Apple’s Liquid Glass is here to stay, at least for the next 5 years.

Is Apple Signalling Something? 🤔

With the advent of AI and spatial computing, we’ve seen Meta working with Ray-Ban to create the Ray-Ban Meta AI glasses, Apple with devices like Vision Pro, other companies such as Even Realities with its G1 glasses, and even Google working with Gentle Monster and Warby Parker to create smart glasses powered by AI.

Is Apple training us to be more “glass-ready”?

I personally own the Ray-Ban Metas and have enjoyed using voice commands. However, there’s speculation that Meta will announce a new version this year with an in-lens display. Google has also made announcements about its XR glasses, which would include an in-lens display. This strongly suggests a possible reason for Apple’s new Liquid Glass UI: to train us for an “Apple Glass”?

I guess my question about this new-ish overhaul into a glass interface is whether it is actually needed, and whether being pushed to use a glass interface makes sense from a consumer standpoint. Should I be “adopting” this new look because of what it might usher in? Or should there still be a distinct difference between touch interfaces and glass interfaces? You can read more of my thoughts at the end of this newsletter.

Where’s All This Going? 💝

Now, I spoke of using voice commands with my Ray-Ban Meta glasses to get tasks done, questions answered and so on, but the experience is not built solely on how the product answers your questions. As consumers, we also measure how responsive it is, how accurate the answers are and how effectively it performs. All of these build trust, and this happens in a split second in our brains.

Trust is the currency of all consumer relationships. Without it, there’s no transaction, only hesitation. When we buy or consume a product, we look out for trust signals. However, with businesses and even consumers using AI, there’s a shift in how we consume and in how businesses serve their customers.

Understanding the differences would allow you to design these experiences and strike a balance between emotional intuition and computational logic. Let’s break this down side by side:

| Signals & Factors | Human Psychology | Artificial Intelligence |
| --- | --- | --- |
| Basis of Action | Emotions, instincts, experiences, social proof | Data, probabilities, logic, and pattern recognition |
| Trust Mechanism | Built through relationships, consistency, vulnerability, empathy | No concept of trust. Operates regardless of emotional context |
| Perception of Risk | Humans weigh risk emotionally: “Will I regret this?” | AI weighs risk statistically: “What is the likelihood of failure?” |
| Adaptability | Can adapt based on context, mood, or personal principles | Adapts only based on data inputs and training; doesn’t have principles or feelings |
| Pattern Recognition | Recognises patterns but is influenced by emotion and cognitive bias | Highly efficient at spotting patterns across vast datasets |
| Consistency | Can be inconsistent: mood, fatigue, past experience affect decisions | Consistent based on model logic, assuming consistent inputs |
| Social Proof | Trusts what others say or do, even irrationally (herd behaviour) | Doesn’t care about others’ opinions unless programmed to incorporate popularity data |
| Emotional Intelligence | Feels empathy, builds rapport, picks up on subtle emotional cues | Can simulate empathy, but doesn’t feel; emotion recognition is statistical only |
| Forgiveness | Forgives based on loyalty, empathy, or narrative | Cannot forgive. Only updates its behaviour if trained to do so |
| Authority Influence | Humans trust authority, branding, uniforms, tone of voice | Doesn’t recognise authority; treats all inputs equally unless weighted manually |

If trust is the key currency in consumer behaviour, AI would be a proxy for trust, not a substitute for it.

The Shift 👀

Machines don’t feel empathy; they calculate. And the fact is that most of us interact with machines daily. AI today is given autonomy as a decision system to act on our behalf. As such, a degree of trust is needed. As designers, we need to start shifting our mindset towards building trust instead of interfaces. As consumers, even as we hand control to AI, we want to know what it is doing. Its role needs to be explicit, while still giving users control through feedback, the ability to override suggestions, or even adjusting the level of AI involvement.

With that in mind, there is also a need to surface the ‘Why’ and make it clear, because people trust what they understand. For example, the smart AI glasses you are wearing could ask you, ‘Care to pause and take a mindful break? This is recommended based on your sleep and stress patterns in the past week.’ You’re given the ‘Why’, and you’re still in control of whether to take the break or just ignore it. This experience builds trust, yet it doesn’t have any interface built along with it.
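If you like to prototype, here is one rough way to think about that mindful-break moment in code. It is only a sketch in Python, not how any real glasses work: the names `Suggestion`, `Involvement`, `should_surface`, `to_prompt` and `respond` are all made up for illustration, and so are the three involvement levels. The point is simply that every suggestion carries its ‘Why’, and the user decides how often the assistant speaks up and whether to act on it.

```python
from dataclasses import dataclass
from enum import Enum


class Involvement(Enum):
    """How much the user lets the assistant speak up (hypothetical levels)."""
    OFF = 0
    IMPORTANT_ONLY = 1
    PROACTIVE = 2


@dataclass
class Suggestion:
    action: str              # what the assistant proposes
    reason: str              # the 'Why' that is shared with the user
    important: bool = False  # assumed flag for urgent suggestions


def should_surface(s: Suggestion, level: Involvement) -> bool:
    """Respect the user's chosen level before interrupting them."""
    if level is Involvement.OFF:
        return False
    if level is Involvement.IMPORTANT_ONLY:
        return s.important
    return True


def to_prompt(s: Suggestion) -> str:
    """Pair the proposal with its reason so the user hears the 'Why'."""
    return f"{s.action} This is recommended based on {s.reason}."


def respond(s: Suggestion, accepted: bool) -> str:
    """The user stays in control: ignoring a suggestion is a valid outcome."""
    return "started" if accepted else "dismissed"


break_tip = Suggestion(
    action="Care to pause and take a mindful break?",
    reason="your sleep and stress patterns in the past week",
)

if should_surface(break_tip, Involvement.PROACTIVE):
    print(to_prompt(break_tip))
```

Notice that nothing here describes a screen. The design decisions are about what gets said, why, and who has the final say, which is where the trust comes from.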

It might be a little difficult to picture, but you can create an exercise for yourself to re-imagine how this would look, without any limitations. Note your ideas down and tie them back to where things are today, and you might find a way to make this vision a reality.

Reflect: This might be the new digital frontier that changes the way we do things, which would in turn shape the way these experiences are created. I would suggest that you explore how to build trust experiences across these new advancements and products.

Mentor’s Notes 🗒️

My stance is to approach this with tempered optimism: skeptically hopeful, encouraged but not convinced. Apple has missed the mark on several fronts since iOS 7 and has had to backtrack on some of its changes, like the harshly criticised antenna signal dots. As designers or even product managers, you should weigh the vision of these giant tech companies and think about how these uses of AI could evolve and become more embedded in our daily lives. With that in mind, consider how you can start supporting some of these “use cases” and test how your product could evolve. This would help you make more informed choices as the masses start adopting, with you already at the forefront, shaping the future.

P.S. Insightful? Helpful? Or perhaps, an interesting read? Share it with your friends or colleagues and talk about it. It might just be your catalyst to designing your next big thing.